Why GPT-5 Thinking Mode Beats Claude 4 Opus for Brainstorming PRDs (And It’s Not Even Close)

A few weeks ago, I wrote about “Stop Asking Claude Code to ‘Build Me an App’ – Here’s How to Actually Get What You Want”, where I shared my PRD brainstorming workflow with Claude 4 Opus.

I thought I had the perfect system.

Then GPT-5 thinking mode entered the scene…

That’s when I realized I’d been settling for “good enough” when “exceptional” was possible.

After running the exact same PRD brainstorming session through both AIs, the difference was shocking.

Let me show you why GPT-5 thinking mode has completely replaced Claude for my requirements gathering.

.

.

.

The Test: Same Project, Two Different AIs

I gave both AIs the identical starting prompt:

(My complete PRD brainstorming prompt is in my previous post.)

Both were instructed to iterate with me until we had a complete PRD.

What happened next revealed everything.

.

.

.

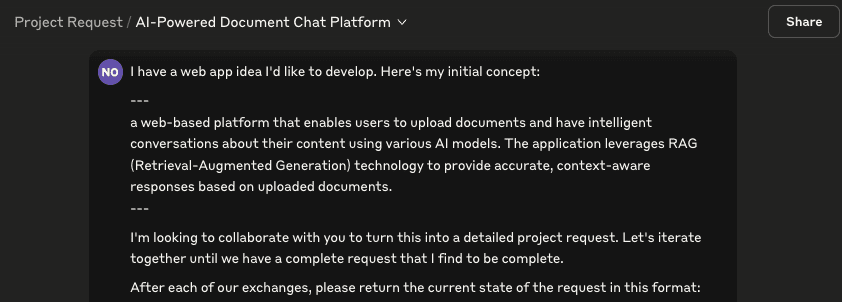

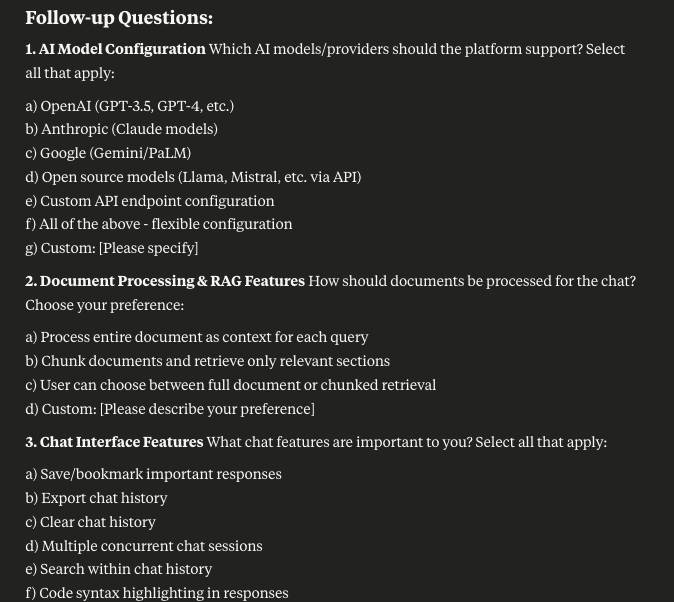

The Thoroughness Gap: 14 Questions vs 6

GPT-5’s opening move: 14 meticulously organized questions across 7 categories:

- Audience & Use Cases

- Content Scope

- Collaboration & Sharing

- Pricing & Packaging

- Branding & UX

- Compliance & Security

- Differentiators

Claude’s opening: 6 questions, loosely organized.

But it wasn’t just the quantity – it was the quality of organization.

GPT-5 grouped related decisions together.

Want to define your audience?

Here are all the audience-related questions in one section.

Ready to tackle content?

Here’s everything about documents and formats.

Claude mixed concerns.

Document formats in question 2, collaboration in question 6, with pricing wedged in between.

The result?

With GPT-5, I made coherent, related decisions.

With Claude, I was jumping between unrelated topics, losing context with each switch.

.

.

.

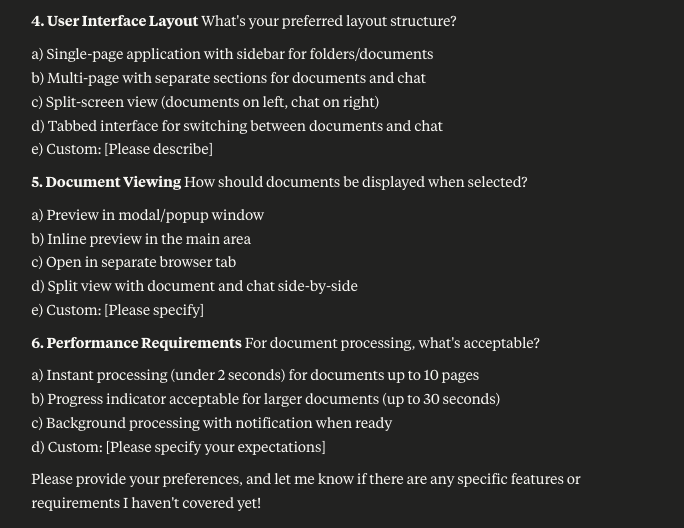

The Detail Devil: GPT-5 Never Lets Anything Slide

Here’s where it gets really interesting.

When I told GPT-5 I wanted to “summarize long PDFs” but then said “text and markdown only” for file types, it immediately caught the contradiction.

It then offered three ways to resolve this:

- a) Replace the use case with “Summarize long text/Markdown files”

- b) Keep the use case but clarify PDF conversion approach

- c) Custom solution

Claude? Missed it entirely. Just updated the requirements with the contradiction intact.

This happened repeatedly.

GPT-5 caught every inconsistency, every gap, every ambiguity.

It was like having a senior product manager reviewing my requirements in real-time.

.

.

.


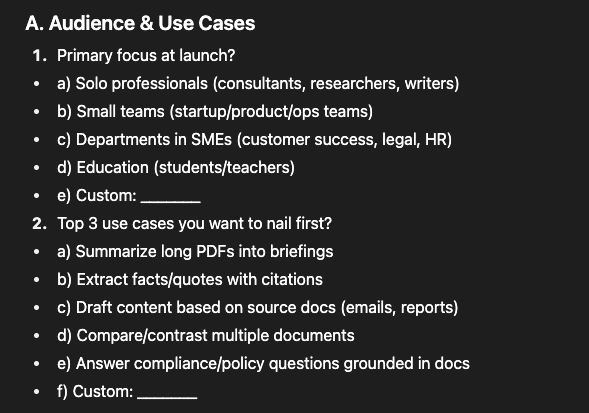

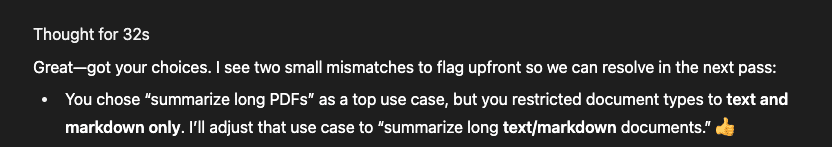

The Pattern Consistency: Options All The Way Down

This drove me crazy with Claude.

Claude’s pattern breaking:

- Question 1: Gives options (a, b, c, d, e, f)

- Question 2: Gives options (a through h)

- Question 3: Suddenly just asks “Which AI models/providers should the platform support?” with no options

- Question 4: Back to options

- Question 5: Mix of some with options, some without

I had to constantly switch between selecting from a list and coming up with my own answers. Cognitive overhead I didn’t need.

GPT-5’s consistency: Every. Single. Question. Had. Options.

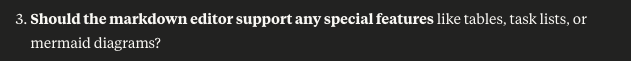

Even granular details came with options.

When I wanted something custom, I could always choose that. But 90% of the time, the options covered what I needed.

This consistency meant I could move fast.

See options, pick one, move on.

No context switching between “selection mode” and “creation mode.”

.

.

.

The Persistence Factor: GPT-5 Doesn’t Give Up

Watch what happens when I give minimal answers.

My responses to GPT-5:

1. A
2. B
3. B
4. B

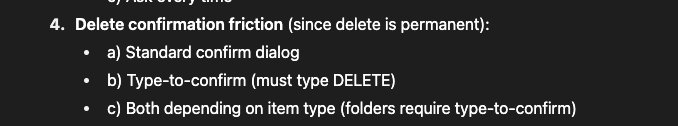

GPT-5’s reaction: Instead of just accepting these and moving on, it dug deeper:

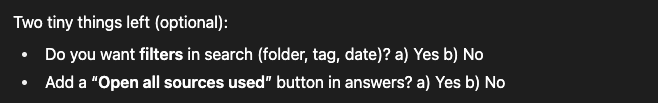

It kept refining until every single detail was captured.

Not annoying persistence – smart persistence. Each follow-up revealed something I hadn’t considered but definitely needed to decide.

Claude’s approach:

After my final responses, Claude asked more follow-up questions.

Good questions, but notice: no options provided.

Back to making me generate answers from scratch.

.

.

.

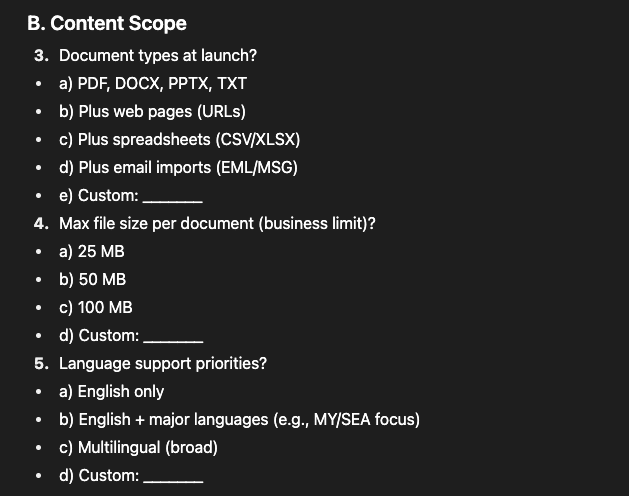

The Organization Difference: Categories vs Chaos

GPT-5’s question organization:

- A. Audience & Use Cases

- B. Content Scope

- C. Collaboration & Sharing

- D. Pricing & Packaging

- E. Branding & UX

- F. Compliance & Security

- G. Differentiators

Each section was a complete thought. Make all your audience decisions, then move to content, then collaboration. Logical flow.

Claude’s organization:

1. Target Audience & Use Case

2. Document Types & Formats

3. AI Model Integration

4. Document Organization

5. Pricing/Access Model

6. Collaboration Features

Looks similar? It’s not. Claude jumps from use case to formats to AI models, then back to document organization. There’s no coherent flow, so you’re constantly context-switching between different mental models.

.

.

.

The Completeness Check: 50+ Decisions vs Hope

By the end of the GPT-5 session, I had made over 50 explicit decisions about my product.

Every decision was:

- Presented with context

- Given multiple options

- Validated against other decisions

- Refined if there were conflicts

With Claude, I made about 30 decisions, and several critical areas were just… assumed.

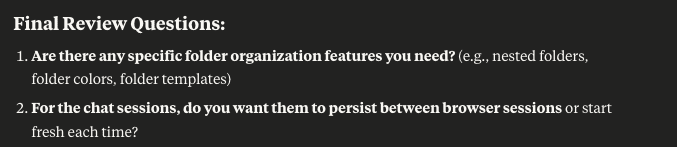

Example: Session persistence. GPT-5 asked about it upfront. Claude only asked at the very end, almost as an afterthought. But this is a fundamental architectural decision that affects everything from the database schema to the user experience.

.

.

.

The Verdict: It’s Not Even a Competition

Look, Claude 4 Opus is good. If GPT-5 thinking mode didn’t exist, I’d still be happy using Claude for PRDs.

But GPT-5 thinking mode is exceptional.

It’s the difference between:

- A helpful assistant vs. a senior product manager

- Getting most requirements vs. getting ALL requirements

- Good enough vs. production-ready

- Hoping you caught everything vs. knowing you caught everything

.

.

.

How to Maximize GPT-5 Thinking Mode for PRDs

1. Answer everything

Even if a question seems minor, answer it. GPT-5 asks each question for a reason. That “minor” decision about folder colors? It affects the entire information architecture.

2. Choose from options (mostly)

Unless you have a specific custom need, pick from the provided options. They’re thoughtfully crafted, and in my sessions they covered what I needed about 90% of the time.

3. Review the categories

GPT-5’s categorization isn’t random. Each category represents a complete area of your product. Make sure you’ve thought through each one.

4. Embrace the follow-ups

Those “tiny things left” aren’t GPT-5 being pedantic. They’re the difference between a good PRD and a great one.

.

.

.

Your Next PRD

Here’s my challenge to you:

Take that product idea you’ve been sitting on. The one that feels too complex to properly define.

- Use the exact prompt template from my previous post

- Run it through GPT-5 thinking mode

- Answer every question it asks

- Watch as your vague idea transforms into a production-ready PRD

The difference isn’t subtle. It’s transformative.

GPT-5 thinking mode doesn’t just help you write a PRD. It forces you to make every decision your product needs before you write a single line of code.

That’s not just better requirements gathering.

That’s better product development.

P.S. – I ran 5 different projects through both AIs. GPT-5 caught an average of 3x more edge cases and required 50% more decisions. Every additional decision was something I would have discovered the hard way during development. The time investment in answering GPT-5’s questions is nothing compared to the time saved not refactoring later.
